CSV sucks

I've said that CSV sucks many times.

I am on record saying publicly that CSV sucks. That video is a lightning talk that I held at Djangocon 2018 in Darmstadt, but I've said that bit before and after.

I advise against using CSV in any situation where you can avoid it. It is a bad format, it has bad usability and you shouldn't use it.

CSV is also not “dead” in any sense of the word. In fact, I would say it is undead, animated by the will of mad wizards, against its will, and it should have died a long time ago.

From time to time, I read from proponents of the CSV format, and most of the time, that's ok. You do you! Stay stuck in the stupid timeline. But sometimes, they just rub me the wrong way. Like it happened yesterday on the front page of HN. Those arguments come from an especially weird place, because they compare CSV to Parquet, JSONL, MessagePack and the like, most of which have very different use cases. If you start out with a format that doesn't fit your use case, you're really quite doomed from the start. (I'd really like to see you encode OpenTelemetry data with nested spans and added records into a CSV file in a sane way.) If the format you choose happens to be a bad format (like CSV), you are beyond help.

Before we get to why CSV is really really actually quite really bad (it will shock you!), let me go through the presented arguments real quick.

1. CSV is dead simple

Yes, sure, except when it isn't. If everyone could just agree on what CSV actually is, then, sure, we might say that it's simple. Still not as simple as the proper solution which I will present to you at the end, but ok, simple enough, I guess. Alas, in the real world it's about as simple as travelling to another country and plugging in your electrical appliances there. Except that the different plug standards are at least not ALL called the same thing.

Here's the documentation of the CSV reader library in Python. If you think that one counts as “simple”, well, you must be way smarter than me. (To be fair, that is possible. Probable even. Alright, alright, you probably are much smarter than me!)

By the way, so is JSONL.

2. CSV is a collective idea

No, it's a collective bad idea. There are much better alternatives (see below) that are well-thought-out, a property that collective ideas famously lack.

To me, that argument boils down to: “millions of raccoons eat garbage, so eat more garbage!”

3. CSV is text

Yes, and that comes with advantages and disadvantages. So let's count that as a neutral point, and compare it with some alternatives below. By the way, so is JSONL.

4. CSV is streamable

Well, yes, maybe. If that's your use case, fantastic. There are still better alternatives, below. By the way, so is JSONL.

5. CSV can be appended to

On the off chance that I may be repeating myself, so are most of the alternatives below. By the way, so is JSONL.

6. CSV is dynamically typed

I am a fan and proponent of dynamic types, and I rather dislike statically typed programs (in most situations, where appropriate, depending on use case, etc. etm.), but for interfaces, I think you should have the strictest contracts you can make, and make very damn sure that the provider of the interface sticks to the contract. That's the reasoning behind all of the interface description languages, from the most ancient WSDL down to the most modern TypeSpec (IT HAS IT RIGHT IN THE NAME!).

For an interface, you must make sure that what you get is what you expect.

Guess how many guarantees CSV gives you? Zero!

There is a beautiful story where you can download the historic Euro exchange rates from the ECB as a CSV file, but they mistakenly added a comma at the end of the row, and now they will have to keep that useless comma forever because it might break legacy customers' parsing, all thanks to an under-specified format. (Note that the first thing this article from CSVbase, of all places, does is convert it to a proper data format.)
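To see what a stray trailing comma does to a consumer, here is a small sketch using Python's standard `csv` module. The sample rows and the column names `AAA` and `BBB` are made up for illustration; they are not the real ECB file.

```python
import csv
import io

# Hypothetical sample with a trailing comma on every row (NOT real ECB data).
sample = "Date,AAA,BBB,\n2024-01-02,1.10,155.0,\n"

# The plain reader silently reports a fourth, empty column.
rows = list(csv.reader(io.StringIO(sample)))
print(rows[0])

# DictReader goes along with it and uses the empty string as a column name.
records = list(csv.DictReader(io.StringIO(sample)))
print(records[0])
```

Nothing in the format says whether that fourth column is a mistake or part of the contract, so every consumer has to decide for itself.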

By the way, so is JSONL.

7. CSV is succinct

Sure, compared to formats where you can have differently-structured objects in each line where you must repeat the keys (again, use case!), CSV is slightly more succinct. Compared to other tabular formats, it is not.

Of course, statically-typed numbers could be represented more concisely, but you will not save an order of magnitude there either.

Go download that ECB file from above and say that again!

8. Reverse CSV is still valid CSV

This must be the weirdest argument in the bunch. You can hack your way to reading from the end of a CSV file by “feed the bytes of your file in reverse order to a CSV parser, then reverse the yielded rows and their cells' bytes and you are done (maybe read the header row before though)”?!? And that is supposed to be simpler than scanning for newlines (or any record separator) from the end of the file?! Or doing an index scan in any of the grown-up formats? I beg your hecking pardon?
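For comparison, scanning backwards for a record separator might look like the sketch below. The function name `last_record` and its parameters are my own invention, and it assumes the separator can never appear inside a record. That holds for a dedicated separator byte, but not for CSV with quoted newlines.

```python
import os

def last_record(path, sep=b"\n", chunk_size=4096):
    """Return the last record of a file by scanning backwards for `sep`."""
    with open(path, "rb") as f:
        f.seek(0, os.SEEK_END)
        pos = f.tell()
        tail = b""
        while pos > 0:
            # Read one more chunk from the end and prepend it to what we have.
            step = min(chunk_size, pos)
            pos -= step
            f.seek(pos)
            tail = f.read(step) + tail
            # Ignore a single trailing separator, then find the one before the last record.
            body = tail[:-len(sep)] if tail.endswith(sep) else tail
            idx = body.rfind(sep)
            if idx != -1:
                return body[idx + len(sep):]
        # No separator found: the whole file is a single record (or empty).
        return tail[:-len(sep)] if tail.endswith(sep) else tail
```

It only ever reads as many chunks from the end as it needs, which is the whole point of reading a file backwards.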

9. Excel hates CSV

The argument of “interoperation with the most widely-used tool is possible, but difficult, and may break in unexpected ways” is not the own you think it is.

Signed: xan, the CSV magician

Look, this is really what it boils down to. I don't want a magician of a data interchange format. I want an interchange format to be well-defined, well-supported by libraries and languages and without surprises nor Stockholm syndrome. I don't want magic and wizardly knowledge just to transfer some rows of data.

The worst thing about it

It was worse than a crime; it was a blunder.

- Louis Antoine, Duke of Enghien

The worst thing about CSV is that it's a hack, and a bad one at that. All of the format difficulties stem from the single fact that we use characters that can appear in the data as structural elements. That means that you must escape strings, you must think about which characters to use as separators (both for fields and for records) and you have to deal with all the things that can/must occur when someone writes it wrongly, because you can't detect it right away, or at all.
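A tiny illustration of the problem, using a made-up address line: as soon as the separator shows up inside a field, a naive split and a quoting-aware parser disagree about how many fields there are.

```python
import csv
import io

# Hypothetical row: the second field contains the field separator itself.
line = 'Doe,"Main Street 1, Apt. 2",Springfield'

# A naive split treats every comma as structure.
naive = line.split(",")

# The csv module knows about quoting and keeps the address together.
parsed = next(csv.reader(io.StringIO(line)))

print(len(naive))   # 4
print(len(parsed))  # 3
```

Both results look plausible on their own, and that is exactly why you can't detect the mistake right away.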

Using the same characters in data as in the format is a hack. It's the first thing you think of because it's simple and stupid, and it leads to all kinds of problems down the line.

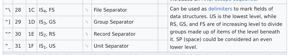

Fortunately, smarter minds have thought about this problem before and have found a much better solution: simply use different characters. Sadly, almost no-one knows about that. Here's an excerpt from the ASCII standard:

from Wikipedia: https://en.wikipedia.org/wiki/C0_and_C1_control_codes

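Here's all the code you need to parse an ASV file, with ASCII-separated values, in Python:

    raw = open("file.asv").read()
    # "\x1e" is the ASCII Record Separator (RS, decimal 30)
    for row in raw.split("\x1e"):
        # "\x1f" is the ASCII Unit Separator (US, decimal 31)
        for field in row.split("\x1f"):
            print(field)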

No ifs, ands or buts. (The code for the writer is equally simple and left as an exercise for the reader.) That's all, unambiguously. No escaping, no faffing, no nothing. You want to have different data in a single file? Go use the Group Separator and File Separator codes and just stuff it all in there! And done.
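For the impatient, here is one way the writer exercise could be solved, together with a matching reader. The names `dump_asv` and `load_asv` are made up, and rejecting fields that contain a separator is my own assumption about what a careful writer should do, not part of any standard.

```python
RS = "\x1e"  # ASCII Record Separator, between rows
US = "\x1f"  # ASCII Unit Separator, between fields

def dump_asv(rows):
    """Serialize a list of rows (lists of strings) into one ASV string."""
    for row in rows:
        for field in row:
            # Assumption: refuse data that contains the separators themselves.
            if RS in field or US in field:
                raise ValueError("field contains a separator character")
    return RS.join(US.join(row) for row in rows)

def load_asv(text):
    """Parse an ASV string back into a list of rows."""
    if not text:
        return []
    return [record.split(US) for record in text.split(RS)]

rows = [["id", "name"], ["1", "Doe, John"], ["2", "multi\nline"]]
assert load_asv(dump_asv(rows)) == rows
```

Commas, quotes and newlines in the data survive the round trip without any escaping, because none of them mean anything to the format.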

If only they'd read the standard.

If only they had just taken just a little time to just think about it instead of going right ahead, we'd have been spared this silly mess. But they didn't just, they won't just, and no one in the history of absolutely everything ever has just.

Alternatives

Alright, enough whining, time for some positive outlook.

Let's only look at the use-case of tabular data with relatively unchanging formats, the occasional empty field notwithstanding. (How do you code an empty field in CSV?! Do you leave it empty? Is that an empty string right in the middle of your beautiful data? Or is it a null? WHO FLIPPING KNOWS?!) Choose differently for different use-cases: Parquet or other columnar data files are for columnar data, JSONL is for varying structures in every line!
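The empty-field question is easy to demonstrate with Python's standard `csv` module and its default settings: a null and an empty string are written identically, so the distinction is gone by the time anyone reads the file.

```python
import csv
import io

buf = io.StringIO()
# One row with a null in the middle, one row with an empty string.
csv.writer(buf).writerows([["a", None, "c"], ["a", "", "c"]])

print(repr(buf.getvalue()))  # 'a,,c\r\na,,c\r\n'

# Reading it back, both rows are indistinguishable.
rows = list(csv.reader(io.StringIO(buf.getvalue())))
print(rows[0] == rows[1])  # True
```

Other writers make other choices (quoted empty strings, `NULL`, `\N`, `NA`), which is rather the point.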

ASV

If you have the control, go for ASV! It has all the advantages of CSV, plus none of the silliness. The only bad thing is that editors don't properly show the separator codes (for viewing, a search-and-replace with newline and tab will be sufficient), nor is it super-easy to type them.

SQLite

An even better choice would be SQLite! I mean, if you don't need to rear-feed your files and then invert them, but actually no, even then, because you can do that with range queries. Sure, sure, you can't open it with every text editor, but the tooling is portable and available almost everywhere (if you have Python, you can open any SQLite file); and sure, sure, you need to learn all of these two SQL commands: select * from table; and insert into table values (...). But you'll manage, and for these herculean efforts you get proper data integrity, proper data types (if you want; SQLite can be flexible if you need it to be), multiple tables in one file, proper binary packing and random access scanning and full SQL querying.
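As a rough sketch of how little ceremony that takes, here is the whole round trip with Python's built-in `sqlite3` module. The table name `rates`, its columns and all the values are invented for the example, and it uses an in-memory database where a real exchange file would use a path.

```python
import sqlite3

# ":memory:" keeps the example self-contained; pass a file path for a real file.
con = sqlite3.connect(":memory:")
con.execute(
    "CREATE TABLE rates (day TEXT NOT NULL, currency TEXT NOT NULL, rate REAL)"
)

# Made-up rows; None is stored as a real NULL, not as an empty string.
con.executemany(
    "INSERT INTO rates VALUES (?, ?, ?)",
    [
        ("2024-01-02", "AAA", 1.10),
        ("2024-01-03", "AAA", 1.09),
        ("2024-01-03", "BBB", None),
    ],
)
con.commit()

# A range query, newest first: no reversing of bytes required.
newest_first = con.execute(
    "SELECT day, rate FROM rates "
    "WHERE currency = ? AND day BETWEEN ? AND ? ORDER BY day DESC",
    ("AAA", "2024-01-01", "2024-01-31"),
).fetchall()
print(newest_first)

# The missing value comes back as None, unambiguously.
missing = con.execute(
    "SELECT rate FROM rates WHERE currency = ?", ("BBB",)
).fetchone()
print(missing)
```

The `NOT NULL` constraints are the kind of contract CSV cannot express: try to insert a row without a currency and the database refuses it.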

Protobuf

On the simpler, more machine-oriented end of the range, there's Protobuf. If you have more than a few fields, the gains from not storing numbers as text are massive! For transferring some data between API endpoints, Protobuf is a great choice!
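Part of where that saving comes from is the base-128 varint encoding Protobuf uses for integers. The sketch below is a hand-rolled illustration of just that encoding, not the Protobuf library and not a full message encoder; the function name `encode_varint` and the sample number are my own.

```python
def encode_varint(n):
    """Encode a non-negative integer as a base-128 varint."""
    if n < 0:
        raise ValueError("this sketch only handles non-negative integers")
    out = bytearray()
    while True:
        byte = n & 0x7F  # take the lowest 7 bits
        n >>= 7
        if n:
            out.append(byte | 0x80)  # more bytes follow: set the continuation bit
        else:
            out.append(byte)
            return bytes(out)

value = 1700000000  # a made-up number, roughly the size of a Unix timestamp
print(len(str(value)))            # 10 bytes as decimal text
print(len(encode_varint(value)))  # 5 bytes as a varint
```

A real Protobuf message adds a small tag per field on top, while the text version still needs its separators, so how much you actually save depends on your data.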

You can download OpenStreetMap data in Protobuf format.

Summary

Don't delude yourself!

CSV bad.

Other formats better.

Choose wisely!